Let’s be honest: if you’ve shopped on Amazon in the last few years, you’ve probably wondered, “Are these reviews for real?” Maybe you’ve seen a $20 Bluetooth speaker with 5,000 glowing five-star reviews, or a vitamin supplement with a suspiciously poetic fanbase. Enter ReviewMeta.com, a tool that promises to cut through the noise and help you spot the fakes. But does it actually work? And how does it stack up against rivals like Mozilla’s Fakespot (which, last we heard, is being wound down)? Let’s dig in.

The Promise: ReviewMeta’s Approach

ReviewMeta’s pitch is simple: paste an Amazon product URL, and it’ll analyze the reviews, flagging anything that looks fishy. The site runs a battery of tests—checking for unverified purchases, repetitive phrases, “one-hit wonder” reviewers, and more. It then spits out an “adjusted rating,” supposedly reflecting only the trustworthy reviews.
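To make the “adjusted rating” idea concrete, here’s a minimal Python sketch of a drop-and-average approach. It’s an illustration under stated assumptions, not ReviewMeta’s actual method: the `Review` fields and the two rules in `looks_unnatural` (unverified purchase, one-hit-wonder reviewer) are hypothetical stand-ins for a couple of the tests described above, and the real site runs many more checks with its own thresholds.

```python
from dataclasses import dataclass


@dataclass
class Review:
    stars: int                  # 1-5 star rating
    verified: bool              # hypothetical field: verified purchase?
    reviewer_review_count: int  # hypothetical field: total reviews by this reviewer


def looks_unnatural(r: Review) -> bool:
    """Toy stand-ins for two of the checks described above (assumed rules)."""
    unverified = not r.verified
    one_hit_wonder = r.reviewer_review_count == 1
    return unverified or one_hit_wonder


def adjusted_rating(reviews: list[Review]) -> tuple[float, int]:
    """Drop flagged reviews and average the rest.

    Returns (adjusted_rating, reviews_kept). Raises ValueError if every
    review was flagged, since there is nothing left to average.
    """
    kept = [r.stars for r in reviews if not looks_unnatural(r)]
    if not kept:
        raise ValueError("every review was flagged; no adjusted rating")
    return round(sum(kept) / len(kept), 1), len(kept)


reviews = [
    Review(5, verified=True, reviewer_review_count=12),
    Review(5, verified=False, reviewer_review_count=3),  # unverified: dropped
    Review(5, verified=True, reviewer_review_count=1),   # one-hit wonder: dropped
    Review(4, verified=True, reviewer_review_count=40),
    Review(3, verified=True, reviewer_review_count=7),
]

print(adjusted_rating(reviews))  # (4.0, 3)
```

Dropping the two flagged five-star reviews pulls the raw 4.4-star average down to 4.0, now based on three reviews instead of five.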

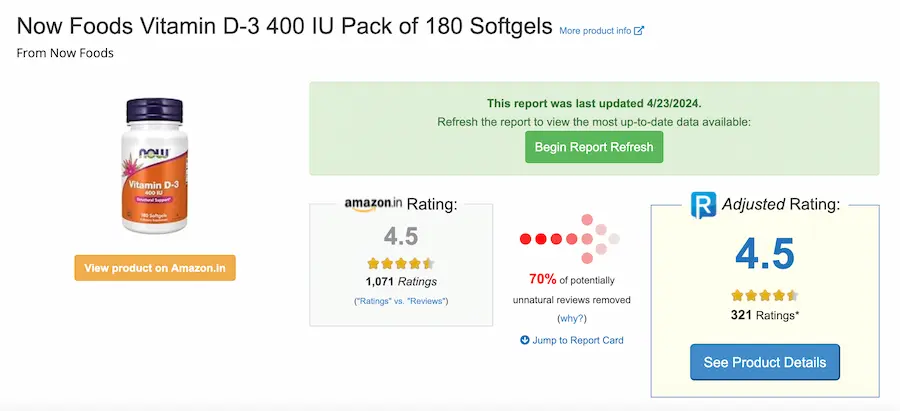

Take, for example, the recent report on Now Foods Vitamin D-3 400 IU. Out of 1,071 ratings, ReviewMeta flagged 70% as “potentially unnatural.” The adjusted rating? Still 4.5 stars, but based on just 321 reviews. The report card is a mixed bag: it fails the “Unverified Purchases” and “Suspicious Reviewers” tests, but passes on rating trends, word count, and more. It’s a classic case of ReviewMeta’s nuanced, sometimes confusing, approach.

The Pros: Transparency and Detail

One thing I genuinely appreciate about ReviewMeta is its transparency. Unlike some black-box algorithms, it shows its work. You can see exactly why a review was flagged—maybe the reviewer only ever posted one review, or maybe they used the phrase “5.0 out of 5 stars” a suspicious number of times. For data nerds (guilty as charged), this is gold.

Forum users on Reddit’s r/Frugal and r/LifeProTips echo this sentiment. “It isn’t perfect but it is significantly more reliable [than Fakespot],” wrote one user, adding that ReviewMeta’s breakdowns help you make your own call rather than just taking the site’s word for it. That’s a big deal in a world where trust is in short supply.

Another plus: ReviewMeta doesn’t just nuke all suspicious reviews. It gives you a sense of scale—how many reviews are potentially fake, and how much they might be skewing the rating. Sometimes, as in the Vitamin D-3 example, the adjusted rating barely changes. Other times, it drops like a rock. That nuance is important.

The Cons: False Positives and Overzealous Filters

But here’s the rub: ReviewMeta’s system isn’t perfect. In fact, it can be a little too eager to flag reviews as “unnatural.” Author David Gaughran, for instance, did a deep dive and found that ReviewMeta flagged a bunch of his book’s reviews as suspicious simply because readers reviewed multiple books in a series or mentioned the book title, which is totally normal behavior for fans. That’s a classic “false positive,” and it isn’t limited to books.

I’ve seen it myself. I once checked a kitchen gadget I genuinely loved, only to find ReviewMeta slapping a big “FAIL” on the report card. Turns out, a bunch of reviewers had only ever reviewed that one product. But for niche items, that’s not so weird—sometimes people just don’t write a lot of reviews!

Redditors and Quora users have similar gripes. “It’s a useful tool, but you have to use your own judgment,” says one Quora answer. “Sometimes it flags stuff that’s just… normal.” The consensus: ReviewMeta is a good second opinion, not a final verdict.

The boAt Rockerz 550: A Case Study in ReviewMeta vs. Fakespot

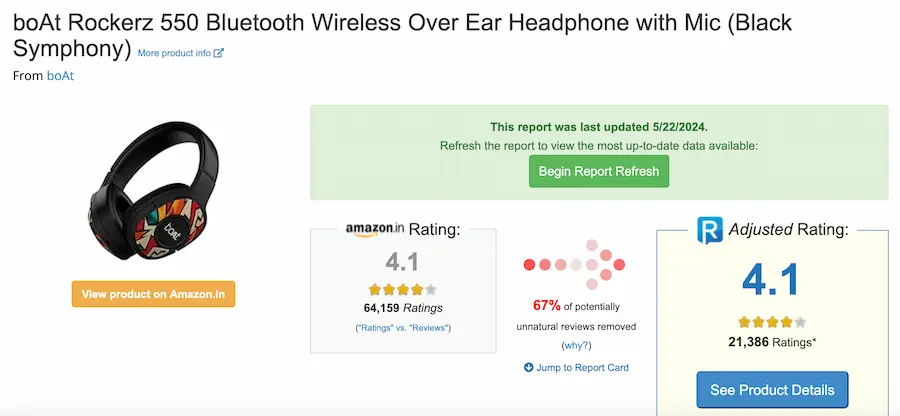

To really get a feel for how these tools work in the wild, let’s look at a massively popular product: the boAt Rockerz 550 Bluetooth headphones. With over 64,000 Amazon ratings, this is the kind of product that’s a magnet for both genuine fans and, let’s be honest, a few review shenanigans.

ReviewMeta’s Take: Red Flags Galore, But the Rating Holds

Plug the boAt Rockerz 550 into ReviewMeta and you get a report that’s… well, let’s just say it’s not a glowing endorsement of the review ecosystem. The site discards a whopping 67% of the reviews as “potentially unnatural,” yet the adjusted rating stays at 4.1 stars, exactly the same as the original. That’s a little mind-bending, right? You’d expect a nosedive, but nope.

But dig into the details and you see why ReviewMeta is so polarizing. The report card is a sea of red:

- FAIL for Deleted Reviews (9 reviews vanished, which always raises eyebrows)

- FAIL for Suspicious Reviewers (a staggering 85% of reviewers are “one-hit wonders”—they’ve only ever reviewed this product)

- FAIL for Phrase Repetition (about 60% of reviews use substantial repetitive phrases, which is a classic sign of review stuffing)

- WARN for Rating Trend (a chunk of reviews came in during a short burst, which can mean a coordinated push)

Yet the site passes the product on unverified purchases, word count, overlapping review history, and incentivized reviews. The average “ease score” for reviewers is a bit lower than the category average, which could mean these reviewers are a little more critical, or just less experienced.
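Two of those report-card lines, “Suspicious Reviewers” and “Rating Trend,” boil down to fairly simple ratios. Here’s a rough Python sketch of how you might compute them yourself. The function names, the 7-day window, and the WARN/FAIL thresholds are all made-up assumptions for illustration; ReviewMeta doesn’t publish its checks as code, and its real cutoffs may differ.

```python
from datetime import date, timedelta


def one_hit_wonder_share(reviewer_counts: list[int]) -> float:
    """Fraction of reviewers whose review of this product is their only one.

    `reviewer_counts` holds each reviewer's total number of reviews
    (hypothetical input; you'd have to gather it yourself).
    """
    if not reviewer_counts:
        return 0.0
    return sum(1 for c in reviewer_counts if c == 1) / len(reviewer_counts)


def busiest_window_share(dates: list[date], window_days: int = 7) -> float:
    """Largest fraction of all reviews posted within any `window_days`
    consecutive calendar days: a crude sliding-window burst detector."""
    if window_days < 1:
        raise ValueError("window_days must be at least 1")
    if not dates:
        return 0.0
    ds = sorted(dates)
    best = 0
    start = 0
    for end in range(len(ds)):
        # Advance the left edge until the window covers at most window_days days.
        while ds[end] - ds[start] >= timedelta(days=window_days):
            start += 1
        best = max(best, end - start + 1)
    return best / len(ds)


def grade(value: float, warn: float, fail: float) -> str:
    """Map a score to PASS/WARN/FAIL using caller-supplied (made-up) thresholds."""
    if value >= fail:
        return "FAIL"
    if value >= warn:
        return "WARN"
    return "PASS"


# Toy data: 8 of 10 reviewers have only ever written one review.
counts = [1, 1, 1, 4, 1, 1, 9, 1, 1, 1]

# Toy data: 4 of 8 reviews arrive within four days in June.
dates = [
    date(2024, 1, 5), date(2024, 3, 1),
    date(2024, 6, 10), date(2024, 6, 11), date(2024, 6, 12), date(2024, 6, 13),
    date(2024, 9, 20), date(2024, 12, 1),
]

print(grade(one_hit_wonder_share(counts), warn=0.5, fail=0.7))  # FAIL
print(grade(busiest_window_share(dates), warn=0.3, fail=0.6))   # WARN
```

The catch, as with the real tool, is context: a high one-hit-wonder share can simply mean the product’s buyers rarely write reviews.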

What’s fascinating is that, despite all these warning signs, the adjusted rating doesn’t budge. This is where ReviewMeta’s transparency is both a blessing and a curse: you get to see how the sausage is made, but sometimes the end result feels counterintuitive.
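A bit of arithmetic explains the “doesn’t budge” result: an average only moves if the reviews you remove skew differently from the ones you keep. This Python sketch uses made-up star histograms (not boAt’s real numbers) and removes two-thirds of 999 reviews in two different ways.

```python
def average(hist: dict[int, int]) -> float:
    """Mean star rating from a {stars: count} histogram."""
    total = sum(hist.values())
    return sum(stars * n for stars, n in hist.items()) / total


def subtract(hist: dict[int, int], removed: dict[int, int]) -> dict[int, int]:
    """Counts left after taking `removed` reviews out of each star bucket."""
    return {stars: n - removed.get(stars, 0) for stars, n in hist.items()}


# Hypothetical product with 999 reviews (illustrative numbers only).
everything = {5: 540, 4: 240, 3: 90, 2: 45, 1: 84}

# Case 1: the filter removes two-thirds of *every* star bucket (666 reviews).
proportional = {5: 360, 4: 160, 3: 60, 2: 30, 1: 56}

# Case 2: the filter removes the same 666 reviews, but mostly five-star ones.
skewed = {5: 500, 4: 166}

print(round(average(everything), 1))                          # 4.1
print(round(average(subtract(everything, proportional)), 1))  # 4.1
print(round(average(subtract(everything, skewed)), 1))        # 2.8
```

If the filter trims every star level by the same proportion, the average stays at 4.1; if it mostly targets five-star reviews, the same 67% cut drops the rating to 2.8. So a rating that holds steady after heavy filtering suggests the flagged reviews looked, star-wise, a lot like the unflagged ones.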

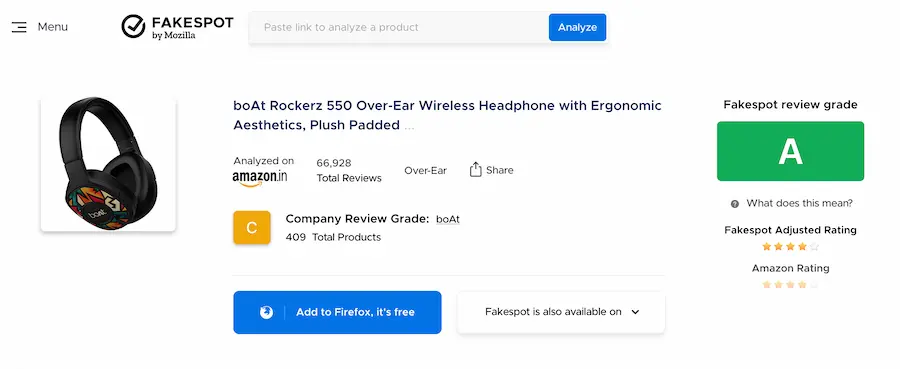

Fakespot’s Take: A Softer, Simpler Verdict

Now, let’s see what Fakespot has to say. Fakespot gives the boAt Rockerz 550 an overall review grade of “A,” a pretty solid score. Its AI suggests there’s minimal deception involved, and the adjusted rating is basically the same as Amazon’s. Fakespot’s summary focuses more on the product’s pros and cons, especially sound quality and build durability. It does note that Amazon has altered or removed a huge number of reviews (over 72,000!), but its engine still reports that “over 90% high quality reviews are present.”

Fakespot’s approach is less granular than ReviewMeta’s. It doesn’t show you the nitty-gritty of why reviews were flagged, but it does give you a quick, digestible answer. For a lot of shoppers, that’s enough. For others, it feels a bit like being told “don’t worry about it”—which, if you’re a skeptic, isn’t super reassuring.

What the Forums Say: Real-World Frustrations

If you poke around Reddit, Quora, or even Amazon’s own forums, you’ll find plenty of people who’ve run these kinds of products through both tools. The consensus? Both ReviewMeta and Fakespot are useful, but neither is gospel. One Redditor summed it up perfectly: “ReviewMeta shows you the math, Fakespot gives you the answer. Sometimes they agree, sometimes they don’t. I use both, but I still read the reviews myself.”

There’s also a lot of chatter about “one-hit wonders.” For a product like the boAt headphones, which is a budget bestseller in India, it’s not shocking that many buyers don’t review anything else. That’s not necessarily a sign of fraud—it could just be a reflection of the customer base. But ReviewMeta’s algorithm doesn’t know that, so it throws up a red flag.

The Human Element: Why Context Matters

Here’s where the human touch comes in. Algorithms are great at spotting patterns, but they’re not so hot at understanding context. Maybe a product gets a flood of reviews because it went viral on TikTok, or because a big sale just ended. Maybe a bunch of reviews use the same phrase because the brand’s marketing is everywhere, or because people in a certain region use similar language.

I’ve personally bought products that ReviewMeta flagged as “failures,” only to find them perfectly legit. And I’ve seen Fakespot give an “A” to stuff that was clearly being astroturfed. The tools are helpful, but they’re not infallible.

Fakespot vs. ReviewMeta: The Rivalry

Of course, ReviewMeta isn’t the only game in town. Fakespot is its main rival, and the two have a bit of a Coke vs. Pepsi thing going on. Fakespot is more aggressive—it’ll slap a big grade on a product if it thinks the reviews are dodgy, and it recalculates the star rating accordingly. Some users love the simplicity; others find it heavy-handed.

On Bogleheads and Reddit, the debate rages. “Fakespot used to be great but now is completely unreliable,” says one Redditor, “I recommend using ReviewMeta.com.” Others say Fakespot is faster and easier for a quick check, but less transparent about its methods. There’s also the issue of Fakespot sometimes flagging legitimate products, especially from smaller brands, which can be frustrating for both buyers and sellers.

A 2025 Demand Sage report notes that 72% of consumers believe fake reviews are becoming the norm, and both Fakespot and ReviewMeta are part of the arms race to restore trust. But neither is perfect. Fakespot’s grades can be a blunt instrument, while ReviewMeta’s detailed reports can be overwhelming or misleading if you don’t know what you’re looking at.

Other Platforms: The Wild West

There are a few other players—like TheReviewIndex and even Amazon’s own “Verified Purchase” badge—but none have the reach or reputation of Fakespot and ReviewMeta. Some browser extensions promise to flag fake reviews in real time, but they’re often based on similar algorithms and face the same challenges: false positives, missed fakes, and the ever-evolving tactics of review spammers.

Real-World Examples and Expert Takes

Let’s get real: fake reviews are a massive problem. A Capital One Shopping study found that up to 47% of Amazon reviews were considered fake or not genuine in 2020, though that number has dropped as Amazon has cracked down. Still, with billions of reviews, even a small percentage is a big deal.

Tommy Noonan, ReviewMeta’s founder, told BuiltIn, “I shop on Amazon and for the most part trust the reviews,” and he estimates that only about 7–11% of products show a pattern of low-quality reviews. That’s not nothing, but it’s not the apocalypse either.

The Verdict: Use With Caution

So, what’s the bottom line? ReviewMeta is a powerful tool, but it’s not a magic bullet. It’s best used as part of a broader strategy: read the reviews yourself, look for patterns, and use ReviewMeta (or Fakespot) as a sanity check. Be especially wary of products with tons of five-star reviews and little detail, or where the adjusted rating drops dramatically after filtering.

And remember: false positives are real. Sometimes, a product gets flagged for reasons that have nothing to do with fakery. Don’t let a “FAIL” scare you off if the reviews seem legit to your own eyes.

Which Tool Is Best?

If you want transparency and detail, ReviewMeta is your friend. If you want a quick, blunt answer, Fakespot might be more your speed. But neither is perfect, and both can be gamed. The best tool? Your own judgment, plus a healthy dose of skepticism.

As one seasoned Amazon shopper put it on Reddit: “Trust, but verify. And then verify again.”

Final tip: Next time you’re shopping for that “miracle” supplement or the latest tech gadget, take a breath, check the reviews, run them through ReviewMeta or Fakespot, and—most importantly—trust your gut. The best defense against fake reviews is a healthy dose of skepticism and a willingness to dig a little deeper. Happy shopping!

EditorSpeak: The smartest move here would be for Amazon to step up and build something way better than what’s out there—or just scoop up these tools and actually improve them. That’s how you save shoppers a ton of time and inject some real trust back into the whole buying experience. Let’s be real, Bezos, you’ve got the resources. If you really wanted to, you could make this happen tomorrow.